3 critical gaps to address in lifecycle management of AI in MedTech

AI/ML applications in MedTech are growing rapidly. However, key gaps remain in the governance and lifecycle management of AI systems needed to support that innovation safely.

Note: Abhinavdutt Singh contributed to this guest article.

As AI capabilities surge across healthcare, particularly in Software as a Medical Device (SaMD), governance mechanisms are struggling to keep pace. Despite emerging frameworks such as the EU AI Act [1], the FDA’s Total Product Lifecycle (TPLC) model [2], and ISO/IEC 42001 [3], systemic challenges persist. Governance in AI SaMD isn’t just regulatory box-checking; it’s about safeguarding patients and ensuring product reliability through design.

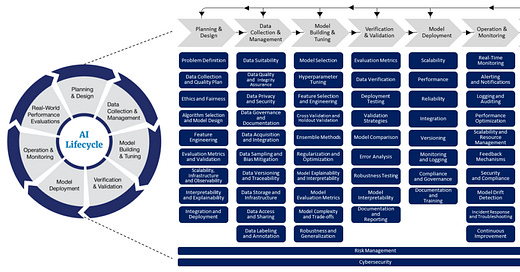

In describing a recent lifecycle management approach [4], the FDA recognizes the challenge of ensuring the safety and effectiveness of AI-enabled healthcare applications, given their inherent ability to continuously learn and adapt to an ever-changing use environment. It is therefore essential to clearly understand the stages in the lifecycle of an AI-enabled SaMD and to align them with the key processes of software development. It is equally critical to integrate the governance of this lifecycle with the broader Quality Management System (QMS), so that non-conformances and emerging risks are identified and managed in a unified way.

The conceptual framework for a lifecycle management (LCM) model for AI-enabled SaMD appears simple and intuitive. Yet there are significant practical hurdles in building a governance model efficient enough to support rapid innovation while ensuring the continued safety and effectiveness of healthcare applications in an increasingly complex and dynamic environment.

Here are three critical gaps that should be addressed in the near term.

1. Traceability of AI updates

One major governance gap lies in the traceability of AI model updates. The Class I recall of Tandem Diabetes Care’s t:connect mobile app, though not AI-powered, demonstrates how an operating system update, if unchecked, can compromise patient safety. If this had involved a self-learning AI, unmonitored drift could have resulted in unexplainable outcomes or misdiagnosis. The FDA’s Predetermined Change Control Plan (PCCP) framework offers guidance, but the operational traceability from algorithm change to clinical impact remains fragmented across many organizations.
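To make "operational traceability from algorithm change to clinical impact" more concrete, here is a minimal sketch of a change record that ties one model update to its PCCP coverage, training-data snapshot, validation evidence, affected clinical claims, and risk file entries. Every field name, identifier format, and the completeness rules in `traceability_gaps` are hypothetical illustrations, not an FDA-prescribed schema; a real implementation would live inside the organization's QMS tooling.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelChangeRecord:
    """Hypothetical record linking one algorithm change to its evidence trail."""
    model_version: str
    parent_version: str
    change_summary: str
    pccp_modification_id: Optional[str]    # None means the change is outside the authorized PCCP
    training_data_sha256: str              # fingerprint of the exact training-data snapshot
    validation_metrics: dict[str, float]   # measured results, e.g. {"sensitivity": 0.93}
    acceptance_criteria: dict[str, float]  # minimum acceptable value per metric
    affected_claims: list[str] = field(default_factory=list)
    risk_file_refs: list[str] = field(default_factory=list)


def fingerprint(data: bytes) -> str:
    """Content hash used to pin a training-data snapshot to a model version."""
    return hashlib.sha256(data).hexdigest()


def traceability_gaps(rec: ModelChangeRecord) -> list[str]:
    """Return human-readable gaps; an empty list means the trail is complete."""
    gaps = []
    if rec.pccp_modification_id is None:
        gaps.append("change not covered by PCCP: regulatory assessment required")
    if not rec.training_data_sha256:
        gaps.append("training data snapshot not fingerprinted")
    for metric, minimum in rec.acceptance_criteria.items():
        value = rec.validation_metrics.get(metric)
        if value is None:
            gaps.append(f"missing validation result for {metric}")
        elif value < minimum:
            gaps.append(f"{metric}={value} below acceptance criterion {minimum}")
    if not rec.affected_claims:
        gaps.append("no clinical claims linked to this change")
    if not rec.risk_file_refs:
        gaps.append("no risk management file entry linked")
    return gaps


if __name__ == "__main__":
    # Illustrative values only; identifiers such as "PCCP-MOD-02" are made up.
    rec = ModelChangeRecord(
        model_version="2.1.0",
        parent_version="2.0.3",
        change_summary="retrained on additional site data",
        pccp_modification_id="PCCP-MOD-02",
        training_data_sha256=fingerprint(b"example snapshot bytes"),
        validation_metrics={"sensitivity": 0.91, "specificity": 0.88},
        acceptance_criteria={"sensitivity": 0.90, "specificity": 0.90},
        affected_claims=["CLAIM-TRIAGE-01"],
        risk_file_refs=["RMF-114"],
    )
    print(traceability_gaps(rec))
```

In this example the only gap reported is the specificity result falling below its acceptance criterion. The design choice is to treat traceability as something a release gate can check mechanically, rather than something reconstructed after an incident.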

2. Mismatch in risk classifications

Many AI-based systems are classified for regulatory purposes using traditional static risk models, despite the adaptive nature of AI. The FDA’s AI/ML SaMD Action Plan [5] and the International Medical Device Regulators Forum (IMDRF) both highlight this misalignment. For instance, an adaptive triage algorithm that re-prioritizes patients based on learned patterns might be classified as low-risk at premarket review, yet its risk could escalate after deployment if it is not appropriately monitored. Over time, as it adapts to real-world patient data, such an application could come to influence critical decisions.

Teams must shift toward dynamic risk frameworks that evolve with model behavior, especially under real-world data conditions. Risk assessment can no longer be a one-and-done exercise. Instead, organizations should implement ongoing surveillance that monitors data drift, model bias, and other performance changes, and adapt their regulatory controls accordingly. Collaboration between regulators and developers is essential to keep governance responsive to real-world AI behavior.
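One way to make a risk assessment "evolve with model behavior" is to let a monitored drift signal trigger risk re-review. The sketch below uses the Population Stability Index (PSI), one common drift metric chosen here for illustration; the article does not prescribe it. The 0.1 and 0.25 thresholds are widely used rules of thumb, not values from the FDA or IMDRF, and the function names and action wording are hypothetical.

```python
import math
from typing import Sequence


def to_proportions(counts: Sequence[int]) -> list[float]:
    """Convert per-bin counts into proportions that sum to 1."""
    n = sum(counts)
    return [c / n for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions of proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def risk_action(psi_value: float, warn: float = 0.1, act: float = 0.25) -> str:
    """Map drift magnitude to a governance action (thresholds are assumptions)."""
    if psi_value >= act:
        return "escalate: reopen risk assessment and evaluate clinical impact"
    if psi_value >= warn:
        return "investigate: review subgroup performance and data sources"
    return "continue routine monitoring"


if __name__ == "__main__":
    # Illustrative counts of patients per age band: validation baseline vs. last month.
    baseline = to_proportions([200, 300, 300, 200])
    live = to_proportions([100, 250, 350, 300])
    value = psi(baseline, live)
    print(f"PSI={value:.3f} -> {risk_action(value)}")
```

With these illustrative counts the PSI is roughly 0.127, which falls in the "investigate" band. Input drift alone does not prove that clinical performance has degraded, so in practice a signal like this would be paired with outcome monitoring before the risk classification is revisited.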

3. Disconnect between training data and clinical claims

Many AI-based SaMD systems lack traceability between training data, clinical claims, and real-world outcomes, creating gaps in safety and regulatory compliance.

For example, dermatology AI models trained predominantly on images of light skin tones do not reliably generalize to diverse populations. IEC 62304 and ISO/IEC TR 5467 require that design inputs reflect the intended use population and context. Models trained on narrow modalities, such as RadImageNet-based tools that underperform outside of chest X-rays, violate the safety and performance principles of IEC 82304-1.

Real-world studies (e.g., PMC10546443 [6]) show that models often fail in live settings due to unmonitored drift. ISO/TR 20416 recommends that such post-market insights feed back into model updates and ongoing evaluation.

TopflightApps and peer-reviewed literature highlight another blind spot: the failure to link training data provenance with clinical performance claims and post-market surveillance. A model trained on narrow datasets may underperform across diverse patient populations, affecting safety and equity. Closing this gap requires integrated evidence management, connecting training datasets, performance metrics, and post-market real-world data through continuous monitoring.
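Integrated evidence management can be illustrated by keeping, for each intended-use subgroup, the training-data count, the claimed performance, and the post-market outcomes side by side, then flagging where they disagree. The sketch below assumes sensitivity is the claimed metric and uses Fitzpatrick skin-type bands as subgroups to echo the dermatology example; the class, function, thresholds (5% minimum training share, 30 confirmed positives), and all numbers are hypothetical. It compares point estimates for brevity, whereas a real surveillance plan would typically use confidence intervals.

```python
from dataclasses import dataclass


@dataclass
class SubgroupEvidence:
    """Hypothetical evidence row for one intended-use subgroup."""
    name: str
    training_count: int          # examples of this subgroup in the training set
    claimed_sensitivity: float   # value stated in the clinical performance claim
    postmarket_true_pos: int     # confirmed positives the model flagged in the field
    postmarket_false_neg: int    # confirmed positives the model missed in the field


def evidence_gaps(rows: list[SubgroupEvidence], min_share: float = 0.05,
                  min_positives: int = 30) -> dict[str, list[str]]:
    """Flag subgroups whose training data or field performance does not support the claim."""
    total_training = sum(r.training_count for r in rows)
    report: dict[str, list[str]] = {}
    for r in rows:
        issues = []
        share = r.training_count / total_training if total_training else 0.0
        if share < min_share:
            issues.append(f"training share {share:.1%} below {min_share:.0%}")
        positives = r.postmarket_true_pos + r.postmarket_false_neg
        if positives < min_positives:
            issues.append(f"only {positives} confirmed positives; claim not yet verifiable")
        else:
            observed = r.postmarket_true_pos / positives
            if observed < r.claimed_sensitivity:
                issues.append(f"observed sensitivity {observed:.2f} "
                              f"below claimed {r.claimed_sensitivity:.2f}")
        if issues:
            report[r.name] = issues
    return report


if __name__ == "__main__":
    # Illustrative numbers only, not real study data.
    rows = [
        SubgroupEvidence("Fitzpatrick I-II", 6000, 0.90, 180, 15),
        SubgroupEvidence("Fitzpatrick III-IV", 3500, 0.90, 92, 8),
        SubgroupEvidence("Fitzpatrick V-VI", 300, 0.90, 40, 12),
    ]
    for subgroup, issues in evidence_gaps(rows).items():
        print(subgroup, issues)
```

Here only the "Fitzpatrick V-VI" band is flagged, both for thin training representation and for field sensitivity below the claim, which is exactly the provenance-to-claim-to-outcome disconnect the section describes.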

Next steps: From compliance to governance by design

To ensure AI-SaMDs remain safe, effective, and equitable, developers must move beyond static documentation toward lifecycle-based AI governance. By linking data provenance, adaptive risk controls, and real-world performance within a unified QMS, we can support innovation while protecting patients.

Compliance should not follow development; it must lead it.

Questions to consider

How is your organization implementing governance strategies beyond documentation?

How are you integrating enterprise-wide AI governance with your Quality Management System?

Are you thinking about a lifecycle management approach to development, deployment and maintenance of AI-enabled innovations?

Are your teams trained and equipped to oversee policy-aligned AI SaMD/SiMD development?

Let’s move beyond guidance into implementation, together. Please share your comments and insights below and let us continue the conversation!

About Abhinavdutt Singh

Abhinavdutt Singh is a seasoned Software Quality & Systems Engineering Leader with 10 years of experience in regulated medical device industries, specializing in SaMD, AI/ML Clinical Decision Support (CDS), robotics, and digital health compliance. He currently serves as a Staff Software Quality Engineer, where he leads risk assessments, CAPA investigations, and digital cloud software deployment readiness for Class II devices.

Abhinav is a contributing author to OWASP’s AI Maturity Model and Cyber Threat Intelligence Guidance, a collaborator on IEEE healthcare data standards, and a key contributor to ForHumanity’s EU AI Act audit schemes. He holds the ASQ CMQ/OE certification, is a frequent conference speaker, and is widely recognized as an advocate for AI governance and regulatory innovation in global health.

References

[2] FDA: Total Product Lifecycle for Medical Devices, accessed May 26, 2025.

[3] ISO/IEC 42001:2024: Information technology - Artificial intelligence - Management system.

[4] FDA: A lifecycle management approach toward delivering safe, effective AI-enabled healthcare, accessed May 26, 2025.

[5] FDA: Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan, January 2021.

[6] Gichoya JW, Thomas K, Celi LA, Safdar N, Banerjee I, Banja JD, Seyyed-Kalantari L, Trivedi H, Purkayastha S. AI pitfalls and what not to do: mitigating bias in AI. Br J Radiol. 2023 Oct;96(1150):20230023.